vAccel

If you’ve ever tried to debug a PyTorch program on an ARM64 system using Valgrind, you might have stumbled on something really odd: “Why does it take so long?” And if you’re like us, you would probably try to run it locally, on a Raspberry Pi, to see what’s going on… And the madness begins!

That’s what we thought when setting up a BERT-based hate speech classifier.

This was part of a broader experiment using

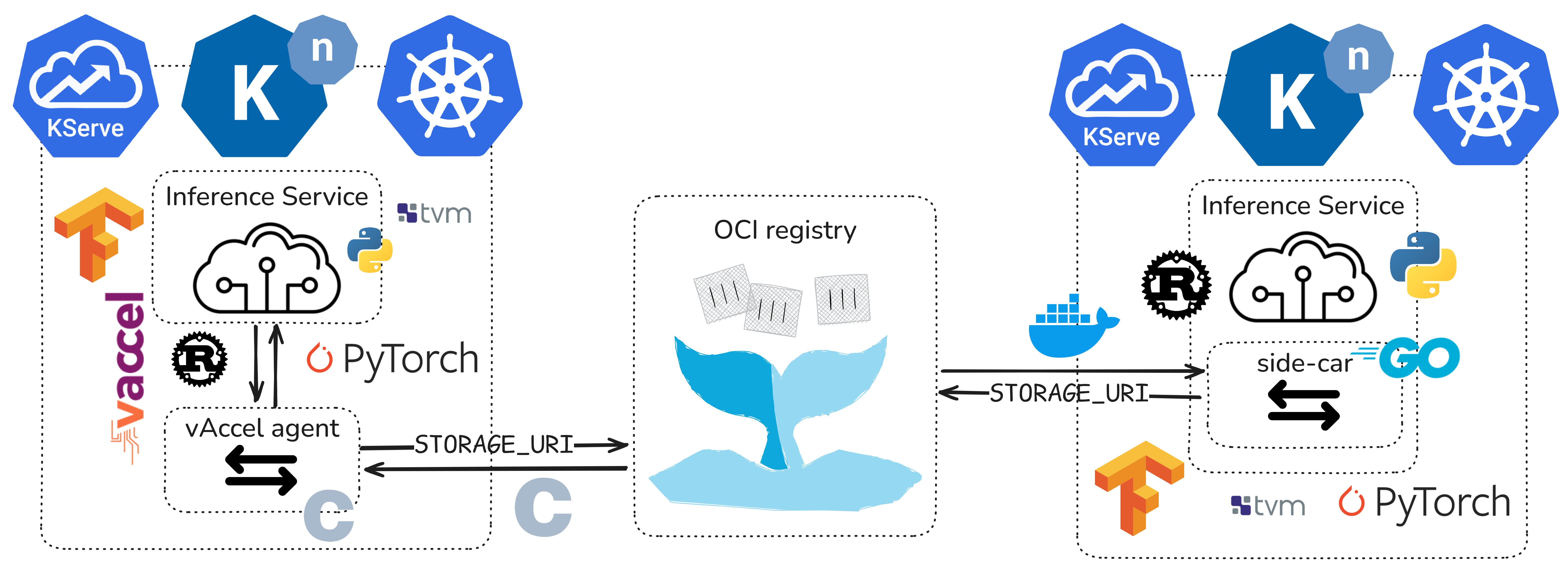

vAccel, our hardware acceleration

abstraction for AI inference across the Cloud-Edge-IoT continuum.

To facilitate the use of vAccel, we provide bindings for popular languages in addition to C. Essentially, the vAccel C API can be called from any language that interacts with C libraries. Building on this, we are thrilled to present support for Go.

Following up on a successful VM boot on a Jetson AGX Orin, we continue exploring the capabilities of this edge device, focusing on the cloud-native aspect of application deployment.

In 2022, NVIDIA released the Jetson Orin modules, specifically designed for extreme computation at the Edge. The NVIDIA Jetson AGX Orin modules deliver up to 275 TOPS of AI performance with power configurable between 15W and 60W.